Robots.txt is a crucial file for any website, especially when it comes to SEO. It acts as a set of instructions for search engine crawlers, telling them which parts of your website they can access and which they should avoid. Properly configured robots.txt rules ensure that search engines can crawl your site efficiently while keeping crawlers away from irrelevant or low-value content. Keep in mind that robots.txt controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it, so the file is not a way to secure sensitive content.

However, errors in robots.txt can have significant consequences. Common mistakes like incorrect syntax, conflicting rules, or disallowing important pages can lead to crawling issues, content missing from search results, and lost traffic. Validating your robots.txt rules regularly is essential to maintain a healthy SEO profile.

This blog post introduces a practical tool that simplifies the validation process using Google Sheets. By leveraging the power of Google Sheets and a custom script, you can quickly and easily check your robots.txt rules against specific URLs. Our goal is to provide you with a straightforward method to ensure your robots.txt file is correctly set up.

Understanding robots.txt Basics (Quick Refresher)

Before diving into the validation tool, let’s quickly review the core components of robots.txt:

- User-agent: Specifies which crawler the rule applies to. For example, `User-agent: *` applies to all crawlers.

- Allow: Explicitly permits access to a specific URL or URL pattern.

- Disallow: Restricts access to a specific URL or URL pattern.

- Wildcards: `*` matches any sequence of characters, and `$` matches the end of a URL. For example, `Disallow: /images/*` disallows all URLs starting with `/images/`.

Correct syntax is crucial. Even a small mistake can lead to unintended consequences, so it’s important to validate your rules regularly.
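To make the wildcard behavior described above concrete, here is a minimal sketch of how a robots.txt path pattern could be translated into a regular expression. The helper names `robotsPatternToRegExp` and `pathMatches` are hypothetical and chosen only for illustration; the sketch assumes rules are matched from the start of the URL path.

```javascript
// Convert a robots.txt path pattern into a RegExp (hypothetical helper).
function robotsPatternToRegExp(pattern) {
  // A trailing "$" anchors the match to the end of the URL path.
  const anchoredAtEnd = pattern.endsWith("$");
  const body = anchoredAtEnd ? pattern.slice(0, -1) : pattern;
  // Escape regex metacharacters, then expand "*" into ".*".
  const escaped = body
    .replace(/[.+?^${}()|[\]\\]/g, "\\$&")
    .replace(/\*/g, ".*");
  // Rules are assumed to match from the start of the path.
  return new RegExp("^" + escaped + (anchoredAtEnd ? "$" : ""));
}

// Return true when the path is covered by the pattern.
function pathMatches(pattern, path) {
  return robotsPatternToRegExp(pattern).test(path);
}

console.log(pathMatches("/images/*", "/images/logo.png"));   // true
console.log(pathMatches("/images/", "/blog/images/"));       // false
console.log(pathMatches("/*.pdf$", "/docs/guide.pdf"));      // true
console.log(pathMatches("/*.pdf$", "/docs/guide.pdf?x=1"));  // false
```

Note that `/images/` and `/images/*` behave the same way here, because a rule without `$` already matches any path that starts with it.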

Introducing the Google Sheets Validation Tool

The Google Sheets Validation Tool is designed to validate URLs against your robots.txt rules directly within Google Sheets. It uses a two-tab setup:

- Robots.txt Rules: This tab is where you paste your robots.txt rules.

- Validate Robots: This tab is where you paste the URLs you want to check.

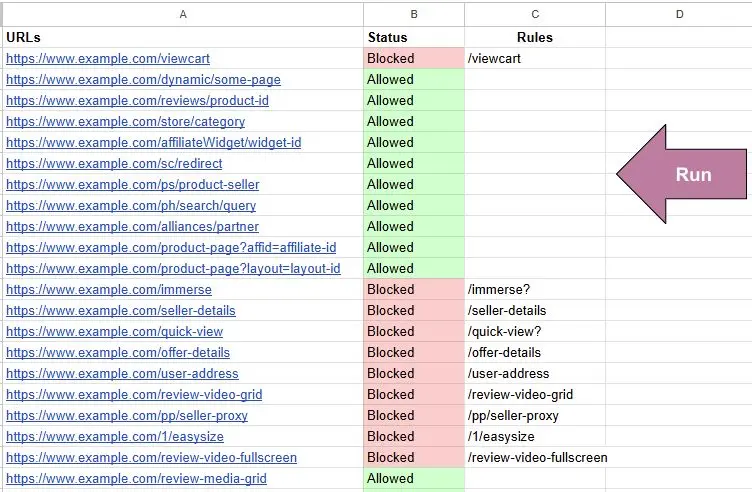

The script reads the rules from the “Robots.txt Rules” tab, parses them, and then matches the URLs from the “Validate Robots” tab against these rules. The output indicates whether each URL is allowed or blocked based on the rules.
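The parsing step can be sketched in plain JavaScript as follows. This is an illustration rather than the script used in the sheet: the `parseRobotsTxt` function and its `{ userAgents, rules }` output shape are assumptions made for this example.

```javascript
// Parse robots.txt text into groups of { userAgents, rules } (hypothetical structure).
function parseRobotsTxt(text) {
  const groups = [];
  let current = null;
  let lastWasUserAgent = false;

  for (const rawLine of text.split(/\r?\n/)) {
    // Drop comments and surrounding whitespace.
    const line = rawLine.replace(/#.*$/, "").trim();
    const sep = line.indexOf(":");
    if (!line || sep === -1) continue;

    const field = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();

    if (field === "user-agent") {
      // Consecutive User-agent lines share one group.
      if (!lastWasUserAgent) {
        current = { userAgents: [], rules: [] };
        groups.push(current);
      }
      current.userAgents.push(value.toLowerCase());
      lastWasUserAgent = true;
    } else if (field === "allow" || field === "disallow") {
      // Rules that appear before any User-agent line are skipped.
      if (current) current.rules.push({ type: field, path: value });
      lastWasUserAgent = false;
    }
    // Other fields (for example Sitemap) are ignored in this sketch.
  }
  return groups;
}

const sample = [
  "User-agent: *",
  "Disallow: /admin/",
  "Allow: /admin/help/",
  "",
  "User-agent: Googlebot",
  "User-agent: Bingbot",
  "Disallow: /tmp/ # scratch files",
].join("\n");

console.log(JSON.stringify(parseRobotsTxt(sample), null, 2));
```

Grouping rules by user agent makes it possible to evaluate a URL for one specific crawler instead of applying every rule to every crawler.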

How to Set Up the Google Sheet (Step-by-Step)

Step 1: Get the Template

To get started, click on this link to access the pre-made Google Sheet template: Google Sheet Template.

Step 2: Make a Copy

Once you have the template open, click “File” in the top menu, then select “Make a copy” to create your own editable version.

Step 3: Paste Your robots.txt Rules

Navigate to the “Robots.txt Rules” tab. Paste your robots.txt rules into column A, starting at cell A2 (the script treats row 1 as a header and skips it). Ensure each rule is on a new line, just as it appears in your robots.txt file.
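Before pasting, it can help to run a quick syntax check on the rules. The following is a minimal sketch of such a check, not part of the sheet's script. The `lintRobotsTxt` function and the `KNOWN_FIELDS` list are assumptions for illustration, and the list includes `crawl-delay`, which some crawlers honor even though it is not part of the core standard.

```javascript
// Field names this sketch accepts (an assumption, not an exhaustive list).
const KNOWN_FIELDS = ["user-agent", "allow", "disallow", "sitemap", "crawl-delay"];

// Return a list of { line, message } problems found in robots.txt text.
function lintRobotsTxt(text) {
  const problems = [];
  text.split(/\r?\n/).forEach((rawLine, index) => {
    const line = rawLine.replace(/#.*$/, "").trim();
    if (!line) return; // Blank lines and comments are fine.

    const sep = line.indexOf(":");
    if (sep === -1) {
      problems.push({ line: index + 1, message: "Missing ':' separator" });
      return;
    }

    const field = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();
    const isPathRule = field === "allow" || field === "disallow";

    if (!KNOWN_FIELDS.includes(field)) {
      problems.push({ line: index + 1, message: `Unknown field "${field}"` });
    } else if (isPathRule && value && !/^[\/*]/.test(value)) {
      problems.push({ line: index + 1, message: "Path should start with '/' or '*'" });
    }
  });
  return problems;
}

const draft = "User-agent: *\nDisalow: /private/\nDisallow: admin/\nAllow /public/";
console.log(lintRobotsTxt(draft));
```

For the sample draft, the check flags the misspelled `Disalow`, the path without a leading slash, and the `Allow` line that is missing its colon.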

Step 4: Paste URLs to Test

Next, go to the “Validate Robots” tab. In column A, starting at cell A2, paste the URLs you want to check against your robots.txt rules. The script writes its results to columns B and C.
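Robots.txt rules describe paths, not full URLs, so it is useful to know which part of each pasted URL is actually compared against a rule. The sketch below shows one way to reduce a URL to its path and query string. The `toRobotsPath` helper is hypothetical, and it uses a regular expression rather than a URL parser so that it does not depend on any particular runtime.

```javascript
// Reduce a full URL to the path and query string that rules are matched against.
function toRobotsPath(url) {
  const trimmed = String(url).trim();
  // Remove the scheme and host, e.g. "https://example.com".
  const withoutOrigin = trimmed.replace(/^[a-z][a-z0-9+.-]*:\/\/[^\/?#]*/i, "");
  // Drop the fragment, which is never sent to the server.
  const withoutFragment = withoutOrigin.split("#")[0];
  // An empty path is treated as the site root.
  if (withoutFragment === "") return "/";
  return withoutFragment.startsWith("/") ? withoutFragment : "/" + withoutFragment;
}

console.log(toRobotsPath("https://example.com/blog/post?page=2#comments")); // "/blog/post?page=2"
console.log(toRobotsPath("https://example.com"));                           // "/"
console.log(toRobotsPath("/images/a.png"));                                 // "/images/a.png"
```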

Step 5: Run the Script

To run the script, follow the instructions in the next section on how to add the script to your Google Sheet.

How to Add the Script to Google Sheets

Step 1: Open the Script Editor

- In your Google Sheet, go to “Extensions” in the top menu.

- Select “Apps Script.” (In older versions of Google Sheets, this option was under “Tools” > “Script editor.”)

Step 2: Paste the Script Code

In the Script editor, paste the following code:

    function checkRobotsRules() {
      var ss = SpreadsheetApp.getActiveSpreadsheet();
      var checkerSheet = ss.getSheetByName("Validate Robots");
      var rulesSheet = ss.getSheetByName("Robots.txt Rules");

      if (!checkerSheet || !rulesSheet) {
        Logger.log("One or both sheets are missing.");
        return;
      }

      // Add headers to columns B and C in the "Validate Robots" sheet
      checkerSheet.getRange(1, 2, 1, 2).setValues([["Status", "Rules"]]);

      // Stop early if either sheet has no data below the header row
      if (checkerSheet.getLastRow() < 2 || rulesSheet.getLastRow() < 2) {
        Logger.log("Add rules and URLs below the header row first.");
        return;
      }

      var urls = checkerSheet.getRange("A2:A" + checkerSheet.getLastRow()).getValues();
      var rulesCells = rulesSheet.getRange("A2:A" + rulesSheet.getLastRow()).getValues();
      var rules = [];

      // Extract Disallow rules from each cell in column A of the "Robots.txt Rules" sheet.
      // User-agent and Allow lines are ignored, so every Disallow rule applies to every URL.
      rulesCells.forEach(function(cell) {
        var cellValue = cell[0];
        if (cellValue) {
          var cellRules = String(cellValue).split(/\r?\n/)
            .map(rule => rule.trim())
            .filter(rule => /^disallow:/i.test(rule));
          rules = rules.concat(cellRules);
        }
      });

      var results = [];
      var colors = [];

      urls.forEach(function(urlRow) {
        var url = urlRow[0];
        if (!url) {
          results.push(["", ""]);
          colors.push(["white", "white"]);
          return;
        }

        // Rules match the path and query string, not the scheme or host
        var path = String(url).trim().replace(/^https?:\/\/[^\/]+/i, "") || "/";
        var blocked = false;
        var matchedRule = "";

        rules.forEach(function(rule) {
          var rulePattern = rule.replace(/^disallow:/i, "").trim();
          if (!rulePattern) return; // An empty Disallow blocks nothing

          // Escape regex metacharacters, expand * to .*, and keep a trailing $ as an end anchor
          var regexPattern = "^" + rulePattern
            .replace(/[.+?^${}()|[\]\\]/g, "\\$&")
            .replace(/\*/g, ".*")
            .replace(/\\\$$/, "$$");
          var regex = new RegExp(regexPattern);

          if (regex.test(path)) {
            blocked = true;
            matchedRule = rulePattern;
          }
        });

        results.push([blocked ? "Blocked" : "Allowed", matchedRule]);
        colors.push([blocked ? "#FFCCCC" : "#CCFFCC", "white"]); // Red for Blocked, Green for Allowed
      });

      var resultRange = checkerSheet.getRange(2, 2, results.length, 2);
      resultRange.setValues(results);
      resultRange.setBackgrounds(colors);
    }

Step 3: Save the Script

- In the Script editor, click the save icon in the toolbar, or press Ctrl+S (Cmd+S on Mac), to save your script.

Step 4: Run the Function

- In the Script editor, select checkRobotsRules from the function dropdown in the toolbar and click the play button (▶️) to run it. The first time you run it, Google asks you to authorize the script.

Alternatively, you can add a custom menu item to run the function directly from your Google Sheet. In the Apps Script editor (“Extensions” > “Apps Script”), add the following code below the existing function:

    function onOpen() {
      var ui = SpreadsheetApp.getUi();
      ui.createMenu('Custom Menu')
        .addItem('Validate Robots.txt', 'checkRobotsRules') // Must match the function name above
        .addToUi();
    }

- Save the script and reload your Google Sheet. You should now see a “Custom Menu” with an option to “Validate Robots.txt.”

Tips and Best Practices

- Keep Your robots.txt Organized: Use comments and clear formatting to make your robots.txt file easy to read and maintain.

- Regular Validation: Regularly validate your robots.txt rules, especially after making changes to your website or robots.txt file.

- Understand Limitations: This script covers basic validation only. It reads Disallow lines and ignores User-agent groups and Allow rules, so it may not handle more complex directives or edge cases. Consider using additional SEO tools for comprehensive validation.

- Combine with Other Tools: Use this Google Sheets tool in conjunction with other SEO validation tools for a more thorough check, such as the tamethebot robots checker.
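One edge case mentioned under the limitations above is a URL that matches both an Allow and a Disallow rule. A common way to resolve this, described in the Robots Exclusion Protocol standard (RFC 9309), is to let the longest matching rule win and to prefer Allow on a tie. The sketch below illustrates that idea. The `toRegExp` and `isAllowed` helpers and the rule objects are hypothetical, and this is not what the sheet's script does.

```javascript
// Convert a robots.txt path pattern into a RegExp anchored at the path start.
function toRegExp(pattern) {
  const anchoredAtEnd = pattern.endsWith("$");
  const body = anchoredAtEnd ? pattern.slice(0, -1) : pattern;
  const escaped = body
    .replace(/[.+?^${}()|[\]\\]/g, "\\$&")
    .replace(/\*/g, ".*");
  return new RegExp("^" + escaped + (anchoredAtEnd ? "$" : ""));
}

// Decide whether a path is allowed: the longest matching rule wins, Allow wins ties.
function isAllowed(rules, path) {
  let best = null;
  for (const rule of rules) {
    if (!rule.path) continue; // An empty rule matches nothing.
    if (!toRegExp(rule.path).test(path)) continue;

    const isLonger = !best || rule.path.length > best.path.length;
    const isTieForAllow =
      best && rule.path.length === best.path.length && rule.type === "allow";
    if (isLonger || isTieForAllow) best = rule;
  }
  // No matching rule means the path is allowed by default.
  return !best || best.type === "allow";
}

const exampleRules = [
  { type: "disallow", path: "/admin/" },
  { type: "allow", path: "/admin/help/" },
];

console.log(isAllowed(exampleRules, "/admin/settings")); // false
console.log(isAllowed(exampleRules, "/admin/help/faq")); // true
console.log(isAllowed(exampleRules, "/blog/"));          // true
```

A Disallow-only check would report `/admin/help/faq` as blocked, which is why results for sites that rely on Allow rules should be confirmed with a second tool.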

Conclusion

Validating your robots.txt rules is essential for maintaining a healthy SEO profile. By using this Google Sheets tool, you can quickly and easily check your robots.txt rules against specific URLs. This practical tool simplifies the validation process, ensuring that your website remains accessible to search engines while protecting sensitive content.

We encourage you to try out this tool and share your feedback. If you find it useful, please share this blog post with others who might benefit from it!